Abstract

Development of validated physics surveys on various topics is important for investigating the extent to which students master the relevant concepts after traditional instruction and for assessing innovative curricula and pedagogies that can improve student understanding significantly. Here, we discuss the development and validation of a conceptual multiple-choice survey related to magnetism suitable for introductory physics courses. The survey was developed taking into account common student difficulties with magnetism concepts covered in introductory physics courses found in our investigation, and the incorrect choices to the multiple-choice questions were designed based upon those common student difficulties. After the development and validation of the survey, it was administered to introductory physics students in various classes in paper–pencil format before and after traditional lecture-based instruction in relevant concepts. We compared the performance of students on the survey in the algebra-based and calculus-based introductory physics courses before and after traditional lecture-based instruction in relevant magnetism concepts. We discuss the common difficulties of introductory physics students with magnetism concepts we found via the survey. We also administered the survey to upper-level undergraduates majoring in physics and PhD students to benchmark the survey and compared their performance with that of traditionally taught introductory physics students for whom the survey is intended. A comparison with the baseline data on the validated magnetism survey from traditionally taught introductory physics courses and upper-level undergraduate and PhD students discussed in this paper can help instructors assess the effectiveness of curricula and pedagogies that are especially designed to help students integrate conceptual and quantitative understanding and develop a good grasp of the concepts. In particular, if introductory physics students' average performance in a class is significantly better than that of students in traditionally taught courses described here (and particularly when it is comparable to the average performance of physics PhD students discussed here), the curriculum or pedagogy used in that introductory class can be deemed effective. Moreover, we discuss the use of the survey to investigate gender differences in student performance.

Original content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Introduction

Research-validated multiple-choice tests can be useful tools for investigating student learning in physics courses, both when traditional lecture-based instruction is used and when innovative curricula and pedagogies are used. Such multiple-choice tests are easy and economical to administer and grade, have objective scoring, and are amenable to statistical analysis that can be used to compare student populations or instructional methods. A major drawback is that students' thought processes are not revealed by the answers alone. However, when combined with student interviews, well-designed multiple-choice tests can be powerful tools for educational assessment.

A number of multiple-choice tests have been developed and widely used by physics instructors around the world to measure students' conceptual learning in physics courses. The most commonly used research-based multiple-choice test for introductory mechanics is the force concept inventory [1–4]. The research-based surveys focusing on other topics in introductory mechanics include the test of understanding graphs in kinematics, energy and momentum conceptual survey, rotational and rolling motion conceptual survey and rotational kinematics of a rigid body about a fixed axis [5–9]. In introductory electricity and magnetism (E&M), the brief electricity and magnetism assessment and the conceptual survey of electricity and magnetism (CSEM) are two broad surveys that cover most E&M concepts except circuits [10, 11]. In addition, other surveys for introductory E&M which focus on specific topics such as direct current resistive electrical circuits and symmetry and Gauss's law have been developed [12, 13].

Electricity and magnetism are important topics covered in many introductory physics courses in high school and college. Therefore, several prior investigations have focused on the difficulties introductory physics students have with electricity and magnetism and instructional strategies that can help students learn those concepts well [14–31] and develop robust problem solving and reasoning skills [32–39].

Since research-validated multiple-choice surveys (or tests) can be valuable in assessing whether a particular curriculum or pedagogy is effective in helping introductory physics students learn the fundamental concepts in magnetism, we developed and validated a 30-item multiple-choice test on magnetism (called the Magnetism Conceptual Survey or MCS). This survey was designed to assess introductory physics students' understanding of magnetism concepts and to explore the difficulties students have in interpreting magnetism concepts and in correctly identifying and applying them in different situations. The identification of student difficulties with various topics in magnetism can be helpful in designing instructional tools to address the difficulties. The survey can also be used to investigate whether the difficulties are universal and whether there is a correlation with instructional approach, instructor, or student preparation and background, e.g., whether students are enrolled in a calculus-based or algebra-based introductory physics course or whether they are female or male, since gender differences have been found in prior studies in physics and mathematics education [40–48]. Moreover, we present baseline results from traditional lecture-based introductory physics courses so that instructors in courses covering the same concepts but using innovative curricula and pedagogies can compare their students' performance with the results provided in this paper to gauge the relative effectiveness of their instructional approach. We note that the magnetism survey discussed here assumes that students are taught magnetism after learning introductory mechanics and electrostatics concepts. The student difficulties section below also describes (as relevant within various categories) how some of the difficulties we found stem from student difficulties with mechanics or analogous difficulties in electrostatics.

The Magnetism Conceptual Survey (MCS) design and validation

The MCS test focuses on topics in magnetism covered in traditional calculus-based or algebra-based introductory physics courses in high school and college up to, but not including, Faraday's and Lenz's laws. During the test design, we paid particular attention to the important issues of reliability and validity [10]. Reliability refers to the relative degree of consistency in scores between test administrations if the test procedures are repeated in immediate succession for an individual or group [10]. On a reliable survey, students with different levels of knowledge of the topic covered should perform according to their mastery (instead of being able to guess the answers). In our research, we used the data to perform statistical tests to ensure that the survey is reliable using the classical test theory [10]. For example, the reliability index measures the internal consistency of the whole test [10]. One commonly used index of reliability is KR-20, which is calculated for the survey as a whole [10]. The KR-20 is high for a survey in which students are not guessing and students with mastery of material consistently perform well across the questions while others do not.
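To make the reliability index concrete, the following is a minimal sketch of the standard Kuder-Richardson 20 formula, KR-20 = (K/(K−1))·(1 − Σ p_i(1−p_i)/σ²), where K is the number of items, p_i is the fraction of students answering item i correctly and σ² is the variance of the total scores. The function name, the use of the population variance and the toy response matrix are assumptions made for illustration; they are not the analysis code or data used for the MCS.

```python
from statistics import pvariance

def kr20(responses):
    """KR-20 for dichotomously scored items.

    responses: list of per-student lists, 1 = correct, 0 = incorrect.
    Assumption: the population variance of total scores is used;
    some references use the sample variance instead.
    """
    n_students = len(responses)
    n_items = len(responses[0])
    totals = [sum(row) for row in responses]
    var_total = pvariance(totals)
    # Sum over items of p * (1 - p), with p the fraction correct on that item.
    pq_sum = 0.0
    for j in range(n_items):
        p = sum(row[j] for row in responses) / n_students
        pq_sum += p * (1 - p)
    return (n_items / (n_items - 1)) * (1 - pq_sum / var_total)

# Hypothetical toy data: 5 students, 4 items (not MCS data).
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(toy), 3))  # 0.8 for this toy matrix
```

In the toy matrix the students are perfectly ordered by mastery, which is why the index comes out high even with only four items.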

Validity refers to the appropriateness of the test score interpretation [10]. A test must be reliable for it to be valid for a particular use. The MCS survey is valid for assessment at the group level regarding whether certain curricula or pedagogies used in introductory physics courses are successful in helping students learn magnetism concepts. The design of the MCS test began with the development of a test blueprint that provided a framework for planning decisions about the desired test attributes. We tabulated the scope and extent of the content covered and the level of cognitive complexity desired. During this process, we consulted with several faculty members who teach introductory E&M courses routinely about the magnetism concepts they believed their students should know.

We classified the cognitive complexity using a simplified version of Bloom's taxonomy: specification of knowledge, interpretation of knowledge and drawing inferences, and applying knowledge to different situations. Then, we outlined a description of conditions/contexts within which the various concepts would be tested and a criterion for good performance in each case. The tables of content and cognitive complexity along with the criteria for good performance were shown to three physics faculty members at the University of Pittsburgh (Pitt), who teach introductory physics courses regularly, for review. Modifications were made to the weights assigned to various concepts and to the performance criteria based upon the feedback from the faculty about their appropriateness. The performance criteria were used to convert the description of conditions/contexts within which the concepts would be tested to make free-response questions. These free-response questions required introductory physics students to provide their reasoning with the responses.

The multiple-choice questions (called items in test design literature) were then designed. The responses to the free-response questions and accompanying student reasoning, along with individual interviews with a subset of students, guided us in the design of good distracter (alternative) choices for the multiple-choice questions. In particular, we used the most frequent incorrect responses in the free-response questions and interviews as a guide for making the distracter choices. Four alternative choices have typically been found to be optimal, and choosing the four distracters to conform to the common difficulties found in introductory physics students' responses increased the discriminating properties of the test items. Three physics faculty members were asked to review the multiple-choice questions, comment on their appropriateness and relevance for introductory physics courses, and detect ambiguity in item wording. They went over several versions of the survey to ensure that the wording was not ambiguous. Moreover, several introductory students were asked to answer the survey questions individually in think-aloud interviews to ensure that they did not misinterpret the questions and that the wording was clear. During these interviews we did not disrupt students' thought processes while they answered the questions; only at the end did we ask them to clarify points they had not made earlier.

Administration of the survey

The final version of the entire MCS can be downloaded from here [49]. This final version of the MCS survey was administered in paper–pencil format both as a pre-test (before instruction in those concepts) and a post-test (after instruction in all relevant concepts) to a large number of students at Pitt. These students were from three traditionally taught algebra-based classes (mostly taken by students majoring in bioscience), and eight regular sections (in contrast to the honors section) of calculus-based introductory classes. The calculus-based course is taken primarily by undergraduate majors in engineering, physics, chemistry and mathematics. In the analysis presented here for the reliability index KR-20, item difficulty, discrimination indices, and point biserial coefficients of the items [10], we kept only those students who took the survey both as a pre-test and a post-test except in one algebra-based physics class. In that algebra-based introductory class, most students who worked on the survey did not provide their names and seven more students took the post-test than the pre-test. Thus, in the algebra-based course, 267 students took the pre-test and 273 students took the post-test. In the regular section of calculus-based courses, 575 students took both the pre-test and the post-test. We note however that even if we do not match the pre-test and post-test scores, the findings presented here are virtually unchanged.

Pre-tests were administered in the first lecture or recitation class at the beginning of the semester in which students took introductory second semester physics with E&M as a major component of the course. The purpose of the pre-test was to understand what pre-conceptions students had about these concepts from their everyday experiences with magnetism concepts or from any previous course they may have taken in high school that included these topics. The students were asked to try to answer the pre-test questions to the best of their ability and they were not allowed to keep the survey. The post-tests were administered in the recitation classes after traditional lecture-based instruction in all relevant concepts on magnetism covered in the MCS. Students were typically allowed to work on the survey for a full class period (40–50 min) during both the pre-test and post-test so that all students had sufficient time to complete the survey.

The KR-20 for the combined algebra-based and calculus-based data is 0.83, which is very good by the standards of test design [10]. The MCS test was also administered to 42 physics PhD students enrolled in a first year course for teaching assistants to benchmark the performance that can be expected of the introductory physics students. The average score for the PhD students is 83% with a KR-20 of 0.87.

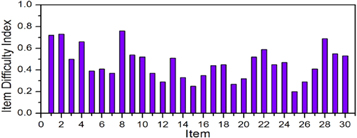

The item difficulty (fraction correct) is a measure of the difficulty of a single test question or item [10]. It is calculated by taking the ratio of the number of correct responses on the question to the total number of students who attempted to answer the question. Figure 1 shows the difficulty index for each item in the survey for the sample of 848 students obtained by combining the algebra-based and calculus-based classes on the post-test. The average difficulty index is 0.46, which falls within the desired criterion range for standardized tests [10]. The average difficulty index for the algebra-based class is 0.45 and for the calculus-based class, 0.53.

Figure 1. Difficulty index (fraction correct) for various questions (items) on the MCS test after traditional instruction (post-test).
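The difficulty index described above can be sketched in a few lines. The function name, the convention of marking an unattempted item as `None`, and the toy data are assumptions made for illustration, not part of the MCS analysis.

```python
def item_difficulty(responses):
    """Fraction correct for each item among students who attempted it.

    responses: list of per-student lists with 1 = correct, 0 = incorrect,
    None = not attempted (a hypothetical encoding chosen for this sketch).
    Assumes every item was attempted by at least one student.
    """
    n_items = len(responses[0])
    difficulty = []
    for j in range(n_items):
        attempted = [row[j] for row in responses if row[j] is not None]
        difficulty.append(sum(attempted) / len(attempted))
    return difficulty

# Hypothetical toy data: 4 students, 3 items; one student skipped item 3.
toy = [
    [1, 0, 1],
    [1, 1, None],
    [0, 0, 1],
    [1, 0, 0],
]
print(item_difficulty(toy))
```

Note that a larger value of this index corresponds to an easier item, since it is the fraction of correct responses.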

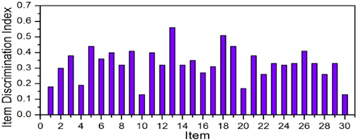

The item discrimination index measures the discriminatory power of each question on a test [10]. A majority of questions on a test should have relatively high discrimination indices to ensure that the test is capable of distinguishing between students with a strong versus weak mastery of the material. A large discrimination index for an item indicates that students who performed well on the entire test overall also performed well on that question. The average item discrimination index for the combined sample of 848 students including all items on the MCS test is 0.33, which is very good by the standards of test design [10]. Figure 2 shows that for this sample the item discrimination indices for 22 items are above 0.3 on the post-test. The average discrimination index for the algebra-based class is 0.29 and for the calculus-based class is 0.33.

Figure 2. Discrimination index for the MCS test questions after traditional instruction (post-test).
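The text does not state which variant of the discrimination index was computed. One common convention in classical test theory compares the top and bottom quartiles of students ranked by total score, D = (N_H − N_L)/(N/4), where N_H and N_L are the numbers of correct responses to the item in the high and low groups. The sketch below assumes that convention; the function name, the `fraction` parameter and the toy data are hypothetical.

```python
def discrimination_indices(responses, fraction=0.25):
    """Upper-lower group discrimination index for each item.

    Assumption: high and low groups are the top and bottom `fraction`
    of students ranked by total score (quartiles by default).
    responses: list of per-student lists, 1 = correct, 0 = incorrect.
    """
    n_students = len(responses)
    k = max(1, int(n_students * fraction))  # size of each group
    ranked = sorted(responses, key=sum)     # ascending total score
    low, high = ranked[:k], ranked[-k:]
    n_items = len(responses[0])
    return [
        (sum(r[j] for r in high) - sum(r[j] for r in low)) / k
        for j in range(n_items)
    ]

# Hypothetical toy data: 8 students, 4 items (not MCS data).
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
print(discrimination_indices(toy))  # [1.0, 1.0, 1.0, 0.0]
```

In this toy example the last item is answered equally well by the high and low groups, so it does not discriminate between them.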

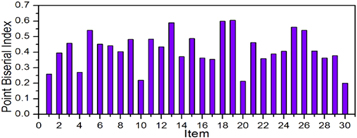

The point biserial coefficient is a measure of consistency of a single test item with the whole test [10]. It is a form of correlation coefficient which reflects the correlation between students' scores on an individual item and their scores on the entire test. The widely adopted criterion for a reasonable point biserial index is 0.2 or above [10]. The average point biserial index for the MCS test is 0.42 and figure 3 shows that all items have a point biserial index equal to or above 0.2 on the post-test, which implies that all questions are reasonable.

Figure 3. Point biserial coefficient for the MCS test questions after traditional instruction (post-test).
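Since the point biserial coefficient is the Pearson correlation between a dichotomous item score and the total score, it can be sketched directly from that definition. The function name and toy data are assumptions for illustration; the sketch also assumes the item is included in the total score (no correction for item overlap), which the text does not specify.

```python
from math import sqrt

def point_biserial(responses, item):
    """Pearson correlation between one item's 0/1 score and the total score.

    Assumption: the total score includes the item itself.
    responses: list of per-student lists, 1 = correct, 0 = incorrect.
    """
    n = len(responses)
    x = [row[item] for row in responses]   # item scores
    t = [sum(row) for row in responses]    # total scores
    mean_x, mean_t = sum(x) / n, sum(t) / n
    cov = sum((a - mean_x) * (b - mean_t) for a, b in zip(x, t)) / n
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x) / n)
    sd_t = sqrt(sum((b - mean_t) ** 2 for b in t) / n)
    return cov / (sd_x * sd_t)

# Hypothetical toy data: 5 students, 4 items (not MCS data).
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
for j in range(4):
    print(j, round(point_biserial(toy, j), 3))
```

The function is undefined for an item that every student answers the same way, because the item score then has zero variance.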

Discussion of student difficulties

The magnetism topics covered in the test include magnitude and direction of the magnetic field produced by current carrying wires, forces on current carrying wires in an external magnetic field, force and trajectory of a charged particle in an external magnetic field, work done by the external magnetic field on a charged particle (which is zero), magnetic field produced by bar magnets, and force between static bar magnets and static charges (which is zero). Table 1 shows the concepts that were addressed by the various questions in the test. The classification in table 1 is one of the several ways of grouping together the MCS questions. This classification does not necessarily reflect the manner in which physics experts would categorize the MCS test questions. For example, one of the concept categories shown in table 1 in which we placed Questions 9, 13, and 17 is 'motion doesn't necessarily imply force'. As shown in tables A1 and A2 in the

Table 1. The concepts covered in the MCS test and the questions that addressed them.

| Concepts | Question numbers |
|---|---|
| Magnetic force on bar magnets | 1, 2, 3 |
| Distinguishing between charges and magnetic poles | 4, 5, 6 |
| Direction of magnetic field inside/outside a bar magnet | 7, 8 |
| Forces on a charged particle in a magnetic field and other fields | 15, 18, 19, 26 |
| Motion does not necessarily imply force | 9, 13, 17 |
| Direction of motion or magnetic force on a moving charged particle in a magnetic field | 10, 14, 16, 17 |
| Work done by magnetic force | 11, 12 |
| Forces on current carrying wires in a magnetic field | 21, 25, 27 |
| Distinguishing between current carrying wires pointing into/out of the page and static point charges | 22, 23, 24 |
| Magnetic field generated by current loops | 28, 29, 30 |

The average score in the algebra-based courses was 24% on the pre-test and 41% on the post-test. In the calculus-based courses, the average pre-test and post-test scores were 28% and 49%, respectively. Tables A1 and A2 in the

Below, we discuss students' difficulties with concepts on magnetism found via the MCS test.

Magnetic force between bar magnets

Questions 1–3 are related to magnetic force on bar magnets. A common distracter in Question 1 was option (e). Some introductory physics students claimed that the net force on the middle bar magnet should be zero because the forces due to the bar magnets on the sides should cancel out. Interviews suggest that when some students saw that there is a magnet on each side of a magnet they concluded that the forces due to the magnets are opposite to each other and therefore there is no net force on the middle bar magnet. These students often did not draw a free body diagram to determine the directions for forces due to the individual bar magnets. From the students' written responses and interviews it appears that many students did not consider Newton's Third Law when they answered the questions. In Question 2, whether there is a third bar magnet or not, due to Newton's Third Law, the force magnet 1 exerts on magnet 2 should be equal in magnitude to the force magnet 2 exerts on magnet 1. However, the appearance of the third bar magnet made many students think that the force with which magnet 1 repels magnet 2 is half or twice the force with which magnet 2 repels magnet 1. On Question 3, the most common incorrect choice was option (b). Interviews confirm that because it is mentioned in the problem that magnet 1 is twice as strong as magnet 2, some students intuitively thought that the stronger the bar magnet, the larger the force due to that bar magnet.

Distinguishing between magnetic poles and charges

Questions 4–6 exemplified students' difficulties in distinguishing between the north and south poles and localized positive and negative charges or between an electric dipole and a magnetic dipole. When considering these types of questions, many introductory physics students claimed that a bar magnet is an electric dipole with positive and negative charges located at the north and south poles. Thus, they incorrectly believed that a static bar magnet can exert a force on static charges or conductors. In Question 4, some interviewed students considered the north pole of the bar magnet as consisting of positive charges, which can induce negative charges on the conductor. Hence, they claimed that the bar magnet exerts a force on the conductor toward the magnet. In Question 5, students had a similar difficulty. On the post-test, more than 40% of the students chose distracter option (e). Interviews suggest that many considered the north pole as a positive charge which repels another positive charge. This type of confusion was even more prevalent in Question 6. On the post-test, 38% of the students in calculus-based courses answered Question 6 correctly, which is not significantly different from the pre-test performance of 22% (before instruction). A common incorrect response was option (e), i.e., there is no net force on charge q0. Both written explanations and interviews suggest that students often considered a bar magnet as an electric dipole. When the interviewer asked some students whether a magnet will retain its magnetic properties when broken into two pieces by cutting it at the center, most of them claimed that the magnetic properties will be retained, and each of the two smaller pieces will become a magnet with opposite poles at the ends.
When the interviewer asked how that is possible if they had earlier claimed that the north and south poles are essentially localized opposite charges, some students admitted they were unsure about how to explain the development of opposite poles at each end of the smaller magnets but others provided creative explanations about how charges will move around while the magnet is being cut into two pieces to ensure that each of the cut pieces has a north and south pole. During the interviews, we explicitly probed how students developed the misconception that a bar magnet must have opposite charges localized at the two poles because when a child plays with a bar magnet, he/she does not necessarily think about localized electric charges at the two ends of the magnet. Some interviewed students claimed that they learned it from an adult while he/she tried to explain why a bar magnet behaves the way it does or they had heard it on a television program. More research is needed to understand how and at what age college students developed such incorrect notions.

Magnetic field of a bar magnet

Questions 7 and 8 assess students' understanding of the magnetic field inside or outside a bar magnet. In Question 7, the most common distracter was option (e). Students often asserted that the magnetic field at the midpoint between the north and south poles should definitely be zero. Some of them even believed that there should be no magnetic field inside the bar magnet. In addition, students' responses to Question 8 revealed another misconception. They claimed that the magnetic field should be zero not only at the midpoint between the poles, but also at any point on the perpendicular bisector of the bar magnet, including points outside the magnet.

Directions of velocity of a charged particle, magnetic field and magnetic force

Students' answers to some MCS test questions suggest that when they were given the directions of two of the quantities out of velocity of the particle, magnetic field and magnetic force, they had difficulty in determining the direction of the third quantity. Tables A1 and A2 in the

Work done by the magnetic force

Questions 11 and 12 are related to the work done by the magnetic field. They also probe whether students understand that the magnetic force is perpendicular to the velocity of a charged particle and only changes the direction of motion of the particle. On Questions 11 and 12, several distracters were popular. Some students claimed that the work done by the magnetic force is always positive while others thought that it can be positive or negative depending on the charge or the orientation of the initial velocity. Some students referred to the equation relating work to the inner product of force and displacement to solve for the work done by the magnetic force. However, they did not realize that the magnetic force is always perpendicular to the direction of motion and instead often thought that the directions of force and velocity of the charged particle can form any angle and the magnetic force can do work.
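The reasoning that the magnetic force does no work can be written out in one line. The derivation below is a standard result stated with conventional symbols (charge $q$, velocity $\vec{v}$, magnetic field $\vec{B}$, displacement $d\vec{r} = \vec{v}\,dt$); these symbols are introduced here for illustration and are not taken from the survey questions.

```latex
\vec{F}_B = q\,\vec{v}\times\vec{B}
\quad\Rightarrow\quad
\vec{F}_B\cdot\vec{v} = q\,(\vec{v}\times\vec{B})\cdot\vec{v} = 0,
\qquad
W = \int \vec{F}_B\cdot d\vec{r} = \int \vec{F}_B\cdot\vec{v}\,dt = 0 .
```

Because the cross product $\vec{v}\times\vec{B}$ is perpendicular to $\vec{v}$ for any orientation of the velocity and any sign of the charge, the angle between the magnetic force and the displacement is always 90°. By the work-energy theorem the kinetic energy, and hence the speed, of the particle is unchanged by the magnetic force alone.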

Movement of a charged particle in a magnetic field does not necessarily imply force

Previous research in mechanics shows that students often believe that motion always implies a force in the direction of motion [1–4]. Our findings are consistent with previous research. On Question 9, a common incorrect option was (c), i.e., there is a force due to the electron's initial velocity. Another common incorrect option was (d). In response to Question 13, students performed significantly better on the post-test than on the pre-test. The most common incorrect response to Question 13 was option (c) followed by options (d) and (e). Moreover, interviewed students were asked such questions in the context of a lecture-demonstration related to the effect of bringing a powerful bar magnet from different angles towards an electron beam (including the case in which the magnetic field and velocity vectors are collinear as in Question 13). The interviewed students were explicitly asked to predict the outcome for both the electron beam and a beam of positive charges. Those who incorrectly selected option (c) in Question 13 explained that the particle will slow down because the magnetic field is opposite to the direction of velocity and this implies that the force on the charged particle must be opposite to the velocity. Of course, this prediction could not be verified by performing the experiment and left students in a confused state. Students who selected options (d) or (e) often incorrectly remembered the right hand rule. They justified their response by providing animated explanations such as 'Oh, the electron wants to get out of the way of the magnetic field and that's why it bends' in a magnetic field. They were surprised to observe that the deflection of the electron beam is negligible when the strong magnet has its north pole or south pole pointing straight at the beam.

Net force on a charged particle or a current carrying wire in a magnetic field

Questions 15, 19 and 26 are related to the net force on charged particles in a magnetic field and another field (e.g., an electric or gravitational field). In these questions, students need to be able to figure out, e.g., the directions of magnetic force and electric force on a charge and then reflect upon whether the forces are comparable based on the information provided. Questions 15 and 19 are similar types of questions and the differences are in the directions of the magnetic field and electric field in each question. Question 26 is similar to Question 19 except that the electric field is replaced with a gravitational field. Students' response distributions for these questions were similar. As tables A1 and A2 in the

In Question 18, the directions of electric field and magnetic field are parallel. Many students' answers to this question reveal another aspect of their difficulties. Table A2 shows that 51% of calculus-based students provided the correct response on the post-test, three times the number on the pre-test. The most common incorrect option in response to Question 18 was option (c). Written explanations and interviews suggest that students had learned about velocity selectors in which the electric and magnetic fields are perpendicular to each other (not the situation shown here) and to the velocity of the particles and there is a particular speed v = E/B for which the net force on the particle is zero. These students had over-generalized this situation and had simply memorized that whenever the velocity is perpendicular to both the electric and magnetic fields, the net force on the charged particle is zero. They neglected to account for the fact that the magnitudes of electric and magnetic fields must satisfy v = E/B and the two fields must be perpendicular to each other and oriented appropriately for the net force on the particle to be zero. During interviews, students were often not systematic in their approach and talked about the net effect of the electric and magnetic fields simultaneously rather than drawing a free body diagram and considering the contributions of each field separately first. A systematic approach to analyzing this problem involves considering the direction and magnitude of the electric force $\vec{F}_E = q\vec{E}$ and magnetic force $\vec{F}_B = q\vec{v} \times \vec{B}$ individually and then taking their vector sum to find the net force. Some interviewed students who made guesses based upon their recollection of the velocity selector example discussed in the class claimed that the net force on the particle is zero for this situation. They were asked by the interviewer to draw a free body diagram for the case in which the charged particle is launched perpendicular to both fields. Some of them who knew the right hand rule for the magnetic force and the fact that the electric field and force are collinear ($\vec{F}_E = q\vec{E}$) were able to draw correct diagrams showing that the electric force and magnetic force are not even collinear. One of these students exclaimed: 'I do not know what I was thinking when I said that the net force is zero in this case. These two are (pointing at the electric and magnetic forces in the free body diagram he drew) perpendicular and can never cancel out'. Such discussions with students suggest the need to help them learn a systematic approach to solving problems so that they do not treat a conceptual problem (such as those on MCS) as a guessing task [1–4].
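The systematic approach described above (find each force separately, then add them as vectors) can be illustrated numerically. The sketch below compares a velocity-selector geometry with crossed fields to a geometry with parallel fields. The field directions, magnitudes and helper names are hypothetical choices for illustration and are not taken from the figure in Question 18.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def lorentz_force(q, v, E, B):
    """Net force q(E + v x B): electric and magnetic parts added as vectors."""
    v_cross_b = cross(v, B)
    return tuple(q * (E[i] + v_cross_b[i]) for i in range(3))

q = 1.0
v = (0.5, 0.0, 0.0)   # velocity along +x, perpendicular to both fields
E = (0.0, 2.0, 0.0)   # electric field along +y

# Velocity selector: B along +z (perpendicular to E) and v = E/B = 2/4.
B_crossed = (0.0, 0.0, 4.0)
print(lorentz_force(q, v, E, B_crossed))   # (0.0, 0.0, 0.0)

# Parallel fields: B along +y, same direction as E.
B_parallel = (0.0, 4.0, 0.0)
print(lorentz_force(q, v, E, B_parallel))  # (0.0, 2.0, 2.0)
```

With parallel fields the electric force points along +y and the magnetic force along +z, so the two forces are perpendicular and cannot cancel regardless of the speed, which is the conclusion the interviewed student reached from the free body diagram.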

Questions 25 and 27 are related to the net force on one of two parallel current carrying wires in a magnetic field. Students' responses to these two problems reflect difficulties that are similar to those shown in Questions 15, 19 and 26. Question 25 was the most difficult question in the test. On the post-test, only 21% of the calculus-based students responded correctly, and options (b) and (c) were the most common distracters. Interviews suggest that students answered this question incorrectly either because they only considered the effect of one force or because they randomly chose a direction for the force since they were unable to compare the magnitudes of the forces. Unlike previous problems asking for the direction of net force, Question 27 asks students to predict the possible direction of the magnetic field to make the net force zero. Student performance on Question 27 was better than on Question 25 and all distracters were popular.

Distinguishing interaction between parallel current carrying wires versus interaction between static charges

We observed that when students were presented with parallel current carrying wires with current flowing into or out of the page, they often failed to reason about the magnetic field or magnetic force due to the wires by using the right hand rule. Students' responses to Questions 22–24 reflect this difficulty. Question 22 is about the direction of the force on a current carrying wire due to a parallel wire carrying current in the opposite direction. The most common incorrect response (option (d)) was chosen based on the incorrect reasoning that the wires would attract each other. Even in the individual lecture-demonstration based interviews, many students explicitly predicted that the wires would attract and come closer to each other. When asked to explain their reasoning, students often cited the maxim 'opposites attract' and frequently made an explicit analogy between two opposite charges attracting each other and two wires carrying current in opposite directions attracting each other. In lecture-demonstration based interviews, when students performed the experiment and observed the repulsion, none of them could reconcile the differences between their initial prediction and observation. One difficulty with Question 22 is that there are several distinct steps (as opposed to simply one step) involved in reasoning correctly to arrive at the correct response. In particular, understanding the repulsion between two wires carrying current in opposite directions requires comprehension of the following issues: (I) there is a magnetic field produced by each wire at the location of the other wire. (II) The direction of the magnetic field produced by each current carrying wire is given by a right hand rule. (III) The magnetic field produced by one wire will act as an external magnetic field for the other wire and will lead to a force on the other wire whose direction is given by a right hand rule. 
None of the interviewed students was able to explain the reasoning systematically. What is equally interesting is the fact that none of the interviewed students who correctly predicted that the wires carrying current in opposite directions would repel could explain this observation based upon the force on a current carrying wire in a magnetic field even when explicitly asked to do so. A majority of them appeared to have memorized that wires carrying current in opposite directions repel. They had difficulty applying both right hand rules systematically to explain their reasoning: the one about the magnetic field produced by wire 2 at the location of wire 1 and the other one about the force on wire 1 due to the magnetic field. This difficulty in explaining their prediction points to the importance of asking students to explain their reasoning. On Question 24, the most common incorrect response was option (c). Similar to Question 22, students often did not follow the steps mentioned above to figure out the directions of magnetic forces and simply used 'opposites attract'. As can be seen from tables A1 and A2, for Question 23, option (e) was the most common incorrect response on both the pre-test and post-test. These students claimed that the magnetic field is zero at point P between the wires. In written explanations and individual interviews, students who claimed that the magnetic field is zero at point P between the wires carrying opposite currents argued that at point P, the two wires will produce equal magnitude magnetic fields pointing in opposite directions, which will cancel out. During interviews, when students were explicitly asked to explain how to find the direction of the magnetic field at point P, they had difficulty figuring out the direction of the magnetic field produced by a current carrying wire using the right hand rule and in using the superposition principle to conclude correctly that the magnetic field at point P is upward. 
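The two right hand rule steps discussed above can be made explicit with a short calculation. The following is a minimal sketch, assuming two infinitely long straight wires perpendicular to the page, with wire 1 carrying current out of the page and wire 2 carrying an equal current into the page; the geometry, the numerical values and the function names `wire_field` and `force_per_length` are illustrative assumptions rather than the setup of the survey questions.

```python
import math

MU0 = 4e-7 * math.pi  # permeability of free space in T*m/A


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def wire_field(point, wire_xy, current):
    """Magnetic field at `point` (x, y) due to an infinite wire parallel to z.

    current > 0 means out of the page (+z), current < 0 means into the page.
    """
    rho = (point[0] - wire_xy[0], point[1] - wire_xy[1], 0.0)
    rho_sq = rho[0] ** 2 + rho[1] ** 2
    z_cross_rho = cross((0.0, 0.0, 1.0), rho)   # right hand rule for the field
    scale = MU0 * current / (2 * math.pi * rho_sq)
    return tuple(scale * c for c in z_cross_rho)


def force_per_length(current, field):
    """Force per unit length on a wire along z carrying `current` in `field`."""
    return tuple(current * c for c in cross((0.0, 0.0, 1.0), field))


d = 0.05          # half the wire separation in m (assumed)
I = 2.0           # current magnitude in A (assumed)
wire1, wire2 = (-d, 0.0), (d, 0.0)
I1, I2 = +I, -I   # opposite current directions

# Steps (I) and (II): field produced by wire 2 at the location of wire 1
B2_at_wire1 = wire_field(wire1, wire2, I2)
# Step (III): force on wire 1 in that field
F_on_wire1 = force_per_length(I1, B2_at_wire1)

# Superposition at the midpoint P between the wires
P = (0.0, 0.0)
B_at_P = tuple(b1 + b2 for b1, b2 in
               zip(wire_field(P, wire1, I1), wire_field(P, wire2, I2)))

print("force per length on wire 1:", F_on_wire1)
print("field at midpoint P       :", B_at_P)
```

In this assumed geometry the force on wire 1 points in the negative x direction, away from wire 2, so the wires repel. The two contributions to the field at P point in the same direction and add, so the field at the midpoint is twice the field of a single wire instead of zero.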
Interviews suggest that for some students, confusion about analogous concepts in electrostatics was never cleared up and could have exacerbated difficulties related to magnetism. For example, several students drew an explicit analogy with the electric field between two charges of equal magnitude but opposite sign, incorrectly claiming that the electric field at the midpoint should be zero because the contributions to the electric field due to the two charges cancel out, similar to the magnetic field canceling out in Question 23. Some interviewed students who were asked to justify their claim that the electric field at the midpoint between two equal magnitude charges with opposite sign is zero were reluctant, claiming the result was obvious. Then, the interviewer told them that simply stating that the influence of equal and opposite charges at the midpoint between them must cancel out is not a good explanation and they must explicitly show the direction of the electric field due to each charge and then find the net field. This process turned out to be impossible for some of them but others who drew the electric field due to each charge in the same direction at the midpoint were surprised. One of them who realized that the electric field cannot be zero at the midpoint between the two equal and opposite charges smiled and noted that this fact is so amazing that he will think carefully later on about why the field did not cancel out at the midpoint. There is a question similar to Question 23 on the broad survey of electricity and magnetism CSEM [11]. However, students performed much worse on Question 23 than on the corresponding question on the CSEM (50% for calculus-based courses on post-test for Question 23 versus 63% for the corresponding question on the CSEM). 
One major difference is that we asked students for the direction of the magnetic field at the midpoint between two wires carrying current in opposite directions (whereas the CSEM asked for the direction of the magnetic field at a point which was not in the middle), and many students had the misconception that the magnetic field is zero at the midpoint. Thus, they gravitated to option (e). In the CSEM, all incorrect choices were quite popular because the misconception specifically targeted by Question 23 about the magnetic field being zero at the midpoint between the wires was not targeted there.
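The electrostatic analogy that some interviewed students invoked for Question 23 can be examined in the same explicit way that the interviewer requested, by finding the field due to each charge and then adding the two. The following is a minimal sketch, assuming two point charges of equal magnitude and opposite sign placed on the x axis; the charge values, the separation and the function name `point_charge_field` are illustrative assumptions.

```python
import math

K = 8.9875517923e9  # Coulomb constant in N*m^2/C^2


def point_charge_field(point, charge_pos, q):
    """Electric field at `point` due to a point charge q located at `charge_pos`."""
    r = [p - c for p, c in zip(point, charge_pos)]
    dist = math.sqrt(sum(x * x for x in r))
    return [K * q * x / dist ** 3 for x in r]


d = 0.1       # distance of each charge from the midpoint in m (assumed)
q = 1e-9      # charge magnitude in C (assumed)
midpoint = (0.0, 0.0, 0.0)

E_plus = point_charge_field(midpoint, (-d, 0.0, 0.0), +q)   # points away from +q
E_minus = point_charge_field(midpoint, (+d, 0.0, 0.0), -q)  # points toward -q
E_net = [a + b for a, b in zip(E_plus, E_minus)]

print("field from +q:", E_plus)
print("field from -q:", E_minus)
print("net field    :", E_net)
```

Both contributions point from the positive charge toward the negative charge, so the net field at the midpoint is twice the field of one charge and does not vanish.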

Magnetic field due to current loops

Questions 28–30 investigated student difficulties in determining the magnetic field generated by a current loop. On the post-test, the most common misconception amongst calculus-based students for Question 28 was that the magnetic field inside a loop should be zero. Interviews suggest that students confused the magnetic field inside a 2D loop with the electric field inside a 3D sphere with uniform charge. For the same students, on Question 29, the most common incorrect option was option (e) followed by option (a). Many students thought that the magnetic field outside of a loop should be zero or in the same direction as the field inside the loop. On Question 30, the most common distracter was option (a). Interviews and written explanations suggest that, similar to determining the direction of the magnetic field due to two current carrying wires with current flowing into or out of the page, students were likely to use 'opposites attract' to solve the problem. Some interviewed students incorrectly claimed that two current loops with current flowing in opposite directions should attract each other and that, therefore, the magnetic field in between the two loops for that case should add up. These responses based upon naïve intuition suggest that students should be provided guidance to follow systematic approaches to solve even conceptual problems [1].
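The claims that the field inside a current loop is zero, or that the field outside has the same direction as the field inside, can be tested numerically. The following is a minimal sketch that sums the Biot-Savart contributions of short segments of a circular loop and evaluates the field component perpendicular to the plane of the loop at points in that plane; the loop radius, the current, the number of segments and the function name `loop_field_z` are illustrative assumptions.

```python
import math

MU0 = 4e-7 * math.pi  # permeability of free space in T*m/A


def loop_field_z(x, radius=1.0, current=1.0, segments=2000):
    """z-component of B at the point (x, 0, 0) in the plane of a circular loop.

    The loop is centered at the origin in the xy plane and carries current
    counterclockwise when viewed from +z. The Biot-Savart integral is
    approximated by a midpoint sum over `segments` short pieces.
    """
    total = 0.0
    dphi = 2 * math.pi / segments
    for i in range(segments):
        phi = (i + 0.5) * dphi
        # position of the current element and its length vector dl
        sx, sy = radius * math.cos(phi), radius * math.sin(phi)
        dlx, dly = -radius * math.sin(phi) * dphi, radius * math.cos(phi) * dphi
        # vector from the current element to the field point
        rx, ry = x - sx, -sy
        r_cubed = (rx * rx + ry * ry) ** 1.5
        total += (dlx * ry - dly * rx) / r_cubed   # z-component of dl x r / r^3
    return MU0 * current / (4 * math.pi) * total


print("center        :", loop_field_z(0.0))
print("inside, x=0.5 :", loop_field_z(0.5))
print("outside, x=2.0:", loop_field_z(2.0))
```

At the center the sum reproduces the standard result mu0*I/(2R). The field is nonzero everywhere inside the loop, and at points in the plane outside the loop it is also nonzero but points in the opposite direction.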

3D visualization and right hand rule

The performance of many students was closely tied to their ability to apply the right hand rule and to visualize in three dimensions. In magnetism, there are many questions related to magnetic fields and magnetic forces. The students are often given a current carrying wire or moving charge in a magnetic field and they have to determine the magnetic force. Therefore, students must know how to use the right hand rule. For example, Question 21 requires the application of the right hand rule once. However, the results shown in tables A1 and A2 suggest that many students had difficulty with it. In response to Question 21, many students incorrectly chose either the direction opposite to the correct direction or the direction of the current.

The requirement of 3D visualization is also high in magnetism because the direction of the magnetic force is always perpendicular to the magnetic field and to the direction of motion of the charged particle. In the MCS test, Questions 10–30 all require the ability to visualize in three dimensions. Question 16 is a good example involving 3D visualization. In this problem, students need to understand that the proton will undergo circular motion in the plane that is perpendicular to the page and simultaneously move along the direction of the magnetic field with a constant speed, making its overall path helical. Failure to decompose the initial velocity into two components and to use the correct velocity component to figure out the magnetic force results in incorrect responses.
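The decomposition described for Question 16 can be sketched as a short calculation. The following is a minimal example, assuming a uniform magnetic field and an initial velocity that makes a given angle with the field; the function name `helix_parameters` and the numerical values are illustrative assumptions and are not taken from the survey question.

```python
import math


def helix_parameters(speed, angle_deg, charge, mass, b_field):
    """Decompose the velocity and return quantities describing the helical path.

    angle_deg is the angle between the initial velocity and the magnetic field.
    Only the perpendicular component contributes to the magnetic force.
    """
    theta = math.radians(angle_deg)
    v_parallel = speed * math.cos(theta)        # unchanged by the magnetic force
    v_perp = speed * math.sin(theta)            # drives the circular motion
    radius = mass * v_perp / (abs(charge) * b_field)
    period = 2 * math.pi * mass / (abs(charge) * b_field)
    pitch = v_parallel * period                 # advance along B per revolution
    return v_parallel, v_perp, radius, period, pitch


# Illustrative values for a proton (speed, angle and field are assumptions)
PROTON_CHARGE = 1.602176634e-19   # C
PROTON_MASS = 1.67262192369e-27   # kg
v_par, v_perp, r, T, pitch = helix_parameters(1.0e5, 30.0,
                                              PROTON_CHARGE, PROTON_MASS, 0.1)
print("v parallel (m/s):", v_par)
print("v perp (m/s)    :", v_perp)
print("radius (m)      :", r)
print("period (s)      :", T)
print("pitch (m)       :", pitch)
```

If the perpendicular component were ignored, the path would be a straight line along the field; if the parallel component were ignored, it would be a closed circle. The helix arises only when both components are kept.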

Performance of upper-level undergraduate physics majors

We also administered the MCS test as a pre-test and post-test to the upper-level undergraduate physics majors enrolled in an E&M course (typically taken in the second or third year in college) which used David Griffiths' E&M textbook. Twenty-six upper-level physics majors took the pre-test and 25 took the post-test. Tables A3 and A4 in the appendix show the percentages of these students who selected each choice on the pre-test and post-test, respectively.

Performance of PhD students

To benchmark the MCS test, we also administered it over two consecutive years to a total of 42 first year physics PhD students who were enrolled in a professional development course for teaching assistants (TAs). These PhD students were simultaneously enrolled in the first semester of the graduate E&M course. The PhD students were told ahead of time that they would be taking a test related to magnetism concepts. They were asked to take the test seriously, but the actual score on the MCS test did not count for their course grade. The average test score for the PhD students was approximately 83% with a reliability coefficient KR-20 of 0.87 (which is excellent [10]). The better performance of PhD students compared to the undergraduates is statistically significant. The minimum score obtained by a PhD student was 33% and the maximum score obtained was 100%, which shows that there is enormous variability in the prior preparation of the first year PhD students. Table A5 in the appendix shows the percentages of PhD students who selected each choice on each question.
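The KR-20 reliability coefficient quoted above is computed from dichotomously scored items. The following is a minimal sketch of the standard Kuder-Richardson formula 20, assuming that each response is scored 1 for correct and 0 for incorrect and that the population variance of the total scores is used (some texts use the sample variance instead); the function name `kr20` and the small response matrix are hypothetical and are not data from this study.

```python
def kr20(responses):
    """Kuder-Richardson formula 20 for a matrix of 0/1 item scores.

    `responses` is a list of rows, one row per student, one column per item.
    """
    n_students = len(responses)
    n_items = len(responses[0])

    # variance of the students' total scores (population variance, an assumption)
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n_students
    var_total = sum((t - mean_total) ** 2 for t in totals) / n_students

    # sum over items of p*(1-p), where p is the fraction answering correctly
    pq_sum = 0.0
    for j in range(n_items):
        p = sum(row[j] for row in responses) / n_students
        pq_sum += p * (1 - p)

    return n_items / (n_items - 1) * (1 - pq_sum / var_total)


# Hypothetical scores for four students on three items (not survey data)
example = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
print(kr20(example))  # 0.75
```

For a 30-item test such as the MCS, each row would have 30 entries and the same function applies without modification.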

Performance by gender

Since previous research suggests that there is often a gender difference in introductory student performance in mathematics as well as physics, which can sometimes be reduced by carefully designed curricula (for example see [40–48]), we investigated gender differences in student understanding of magnetism concepts covered in introductory physics courses by surveying students using the MCS as a pre-test and a post-test before and after instruction in relevant concepts. For analyzing gender differences in students' performance on the MCS, we separated our data into male and female groups. Only the students who provided this gender related information were kept in this analysis. The gender comparison in the algebra-based classes includes 121 females and 110 males (total 231 students) on the pre-test and 106 females and 91 males (total 197) on the post-test. There were 168 females and 403 males (total 571 students) from the regular section (not honors section) of calculus-based classes who took both the pre-test and the post-test and are included in the analysis below. In addition to comparing the results from the algebra-based and regular section of calculus-based classes, we also analyzed the gender data for the post-test only for 95 students enrolled in the honors section of the calculus-based introductory physics course. The honors section students were mainly engineering or physics majors and were not administered the MCS as a pre-test due to time constraints.

We performed a t-test to investigate the gender differences in the pre-test and post-test MCS data [10]. Our null hypothesis is that there is no difference between the mean scores of the male and female groups on the MCS test. If the p-value obtained from the t-test is less than the significance level of 0.05, the rule of thumb is to reject the null hypothesis (here such a case would imply that there is a statistically significant difference between the male and female performance) [10].
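The decision rule described above can be sketched in a few lines. The text does not state which variant of the t-test was used, so the following is only an illustration that assumes Welch's unequal-variance form computed from group means, standard deviations and sizes, and that approximates the two-sided p-value with the standard normal distribution, which is reasonable only for large groups. The function names and the input numbers are hypothetical and are not values from the tables in this paper.

```python
import math
from statistics import NormalDist


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and degrees of freedom from summary statistics."""
    se_sq1 = sd1 ** 2 / n1
    se_sq2 = sd2 ** 2 / n2
    t = (mean1 - mean2) / math.sqrt(se_sq1 + se_sq2)
    df = (se_sq1 + se_sq2) ** 2 / (se_sq1 ** 2 / (n1 - 1) + se_sq2 ** 2 / (n2 - 1))
    return t, df


def approx_two_sided_p(t):
    """Large-sample approximation of the two-sided p-value (normal distribution)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


# Hypothetical group statistics in percent (not values from this paper)
t, df = welch_t(50.0, 20.0, 100, 45.0, 20.0, 100)
p = approx_two_sided_p(t)

ALPHA = 0.05
print("t =", round(t, 3), "df =", round(df, 1), "p ~", round(p, 3))
print("reject null hypothesis" if p < ALPHA else "fail to reject null hypothesis")
```

For small groups, such as the honors section, the normal approximation is too optimistic and the t distribution with the computed degrees of freedom should be used instead.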

Table 2 shows the results for the algebra-based course on the pre-test and post-test. It shows that on both the pre-test and the post-test, there is no statistically significant difference between the average scores of males and females in the algebra-based course (the p-values of 0.942 and 0.355 in table 2 are both larger than 0.05).

Table 2. Algebra-based course pre-test and post-test average performance on MCS (mean and standard deviation S.D. in percent) by gender. The p-values obtained from t-tests show that neither the pre-test nor the post-test average scores of male and female students are statistically significantly different. The number of students is shown (N).

| Test | Gender | N | Mean | S.D. | p value |
|---|---|---|---|---|---|
| Pre-test | Male | 91 | 24% | 8% | 0.942 |
| | Female | 106 | 24% | 8% | |
| Post-test | Male | 110 | 44% | 17% | 0.355 |
| | Female | 121 | 41% | 19% | |

Table 3 shows the results for the calculus-based courses on the pre-test and post-test. It shows that on the pre-test in the regular section there is no statistically significant difference but the post-test for both the regular section and honors section shows a statistically significant difference between the average scores of males and females in the calculus-based courses (see p-values in table 3).

Table 3. Calculus-based course average pre-test and post-test performance on MCS (mean and standard deviation S.D. in percent) for regular section and post-test performance for honors section by gender. The p-values obtained from the t-tests show that the pre-test average scores of males and females in the regular section of the course are not statistically significantly different but the post-test average scores of males and females in both the regular and honors sections of the courses are statistically significantly different. The number of students (N) is shown.

| Test | Gender | N | Mean | S.D. | p value |
|---|---|---|---|---|---|
| Pre-test regular | Male | 403 | 28% | 11% | 0.49 |
| | Female | 168 | 26% | 10% | |
| Post-test regular | Male | 403 | 51% | 21% | 0.019 |
| | Female | 168 | 43% | 18% | |
| Post-test honors | Male | 75 | 58% | 20% | 0.030 |
| | Female | 20 | 47% | 21% | |

To summarize the data presented in tables 2 and 3, for both the algebra- and calculus-based classes, there is no significant difference between the males and females on the pre-test. After traditional instruction, there is still no gender difference in the algebra-based classes. However, a statistically significant difference appeared on the post-test for the calculus-based classes in which there are significantly fewer females in each class than males (for both regular and honors sections).

We investigated students' responses to each MCS question individually to understand how males and females performed on each question. The results are shown in table A6 in the appendix.

Answering many of the questions on the MCS correctly requires that students be able to visualize the situation in three dimensions. For example, some questions require that students apply the right hand rule to figure out the direction of the magnetic field or the force on a moving charge or a current carrying wire. Some prior research suggests that females generally have a better verbal ability but worse spatial ability than males, which can restrict their reasoning in three dimensions, and that there is often a correlation between students' spatial ability and their self-confidence [42]. The reasons for gender differences are quite complex and beyond the scope of this investigation.

Comparison of algebra-based and calculus-based physics courses

The MCS test investigates students' conceptual understanding of magnetism. Solving the conceptual problems posed does not involve mathematical calculations. One issue we wanted to investigate was whether students from calculus-based courses outperform those from algebra-based courses on the MCS test. Therefore, we compared algebra-based courses and calculus-based courses based on their pre-test and post-test average scores. This comparison includes 267 algebra-based students and 575 calculus-based students on the pre-test and 273 algebra-based students and 575 calculus-based students on the post-test.

Table 4 compares the performance of students in the algebra-based and calculus-based courses on the pre- and post-tests and shows that on both the pre- and post-tests, there is a statistically significant difference between the averages for algebra-based courses and calculus-based courses.

Table 4. Algebra-based course versus calculus-based course pre-test and post-test performance on MCS (mean and standard deviation S.D. in percent). The p-values obtained from t-tests show that both the pre-test and post-test average scores of the algebra- and calculus-based courses are statistically significantly different. The number of students (N) is shown.

| Test | Course | N | Mean | S.D. | p value |
|---|---|---|---|---|---|
| Pre-test | Alg-based | 267 | 24% | 8% | 0.000 |
| | Calc-based | 575 | 28% | 11% | |
| Post-test | Alg-based | 273 | 41% | 18% | 0.01 |
| | Calc-based | 575 | 49% | 20% | |

It is interesting that students in the algebra-based and calculus-based courses perform significantly differently on conceptual questions both before and after instruction. One possible reason may be that calculus-based students' better mathematical and scientific reasoning skills help them in understanding concepts better. Calculus-based students are more likely to build a robust knowledge structure and less likely to have cognitive overload during learning. These issues may contribute to the better performance of calculus-based students.

Comparison of the MCS and CSEM test performances

The CSEM survey aims to very broadly assess students' knowledge of introductory electricity and magnetism [11]. This survey has 32 multiple-choice questions but only 12 of them focus on magnetism. We administered the CSEM test to students at Pitt as a pre-test and post-test to investigate how students' performance on the very broad survey compares to their performance on the MCS test, which focuses in depth on magnetostatics concepts. The students at Pitt who were administered the CSEM test were from one traditionally taught algebra-based class and four calculus-based introductory classes. In our analysis, for calculus-based classes, we only kept students who took both the CSEM and the MCS as a pre-test and a post-test. For the algebra-based class, we kept the students who took both the pre-test and the post-test with the CSEM. These students might not be the same students who took the MCS as a pre-test and post-test because many students who worked on the MCS did not provide their names. Thus, in the algebra-based course, 83 students took the MCS as a pre-test and post-test, and 95 students took the CSEM as a pre-test and post-test. In the calculus-based course, 355 students took the CSEM and MCS as a pre-test and post-test. Also, 26 first year PhD students were administered both the MCS and CSEM tests.

Table 5 shows the performance on the CSEM and MCS tests in the algebra-based and calculus-based courses on the pre-test and the post-test (along with the scores of the PhD students for comparison). The results suggest that algebra-based and calculus-based students performed somewhat better on the CSEM than on the MCS test on the pre-test and post-test. In future research we will investigate whether the better performance of introductory students on the CSEM is due to the inclusion of content on electricity and electromagnetic induction on the CSEM (but not on the MCS) or due to other reasons (e.g., the MCS survey spans a wider variety of questions on magnetostatics than the CSEM survey).

Table 5. Overall results for CSEM and MCS tests given both as pre-test and post-test for comparison (average and standard deviation S.D.) along with the number of students (N) in the algebra-based and calculus-based introductory physics courses. The performance of the first year PhD students at the same university (which is significantly better) is also shown for comparison.

| Test | Course | Mean pre-test | S.D. | N | Mean post-test | S.D. | N |
|---|---|---|---|---|---|---|---|
| CSEM | Alg-based | 24% | 8% | 95 | 36% | 13% | 95 |
| | Calc-based | 36% | 13% | 355 | 53% | 18% | 355 |
| | PhD | 82% | 13% | 26 | | | |
| MCS | Alg-based | 24% | 8% | 83 | 32% | 15% | 83 |
| | Calc-based | 28% | 10% | 355 | 46% | 19% | 355 |
| | PhD | 83% | 17% | 42 | | | |

We also used a t-test to compare students' performance in the algebra-based and calculus-based courses on the CSEM test in order to investigate whether the performance on the broader CSEM is similar to that on the MCS test. We find that for both the pre-test and post-test (see table 5), the means of the CSEM test scores for the algebra-based and calculus-based courses are statistically significantly different (the p-values for the t-test are 0.000 for both the pre-test and the post-test). As noted earlier, one possible reason for students in the algebra-based courses performing significantly worse on these conceptual surveys is that even on a conceptual survey, calculus-based students' mathematical skills and scientific reasoning skills may help them in learning the underlying physics concepts better than students in the algebra-based courses.

Summary

Developing a validated survey on magnetism is important for investigating the extent to which introductory physics students learn relevant concepts after traditional instruction and for assessing innovative curricula and pedagogies that can improve student understanding significantly. We developed and validated a survey on magnetism and administered the validated survey as a pre-test (before instruction) and a post-test (after instruction) in traditionally taught algebra-based and calculus-based introductory physics courses. The survey was developed taking into account the common difficulties of introductory physics students described here. Moreover, we administered the survey to upper-level undergraduates and PhD students to benchmark the survey and found that their performance is typically significantly better on a majority of questions than that of the students in the algebra-based and calculus-based introductory physics classes for whom the survey is designed. We also compared algebra-based students' performance with that of calculus-based students and observed a statistically significant difference between the two groups even on the pre-test, which persisted on the post-test. We find no gender difference in the algebra-based courses but a significant gender difference in both the regular and honors sections of calculus-based courses on post-tests (but not on the pre-test in the regular section). Further research is needed to understand the reasons for these gender differences. A comparison with the base line data on the validated magnetism survey from traditionally taught introductory physics courses and from upper-level undergraduate and PhD students discussed in this paper can help instructors assess the effectiveness of curricula and pedagogies which are especially designed to help students develop a solid grasp of these concepts. 
In particular, if the average student performance in an introductory physics class is significantly better than the data from the traditionally taught classes presented here, the curricula and pedagogies used in the class can be considered effective.

Acknowledgments

We thank the US National Science Foundation for award DUE 1524575.

Appendix

Table A1. Percentages of algebra-based (alg-based) introductory physics students who selected choice (a)–(e) on questions (1)–(30) on the test. The correct response for each question has been underlined.

| Item # | Pre-test a | Pre-test b | Pre-test c | Pre-test d | Pre-test e | Post-test a | Post-test b | Post-test c | Post-test d | Post-test e |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 5 | 11 | 69 | 13 | 23 | 2 | 6 | 59 | 10 |
| 2 | 23 | 17 | 59 | 1 | 0 | 28 | 11 | 59 | 0 | 1 |
| 3 | 9 | 70 | 18 | 3 | 0 | 19 | 40 | 39 | 2 | 1 |
| 4 | 71 | 2 | 2 | 20 | 6 | 55 | 3 | 4 | 13 | 25 |
| 5 | 5 | 5 | 2 | 34 | 54 | 36 | 6 | 6 | 10 | 43 |
| 6 | 4 | 29 | 10 | 5 | 51 | 4 | 46 | 6 | 7 | 38 |
| 7 | 14 | 11 | 6 | 7 | 62 | 16 | 8 | 10 | 40 | 26 |
| 8 | 19 | 13 | 15 | 47 | 6 | 7 | 5 | 10 | 75 | 4 |
| 9 | 3 | 21 | 42 | 33 | 0 | 2 | 40 | 36 | 22 | 1 |
| 10 | 19 | 23 | 4 | 46 | 9 | 4 | 3 | 3 | 47 | 43 |
| 11 | 21 | 2 | 8 | 27 | 42 | 13 | 4 | 31 | 20 | 32 |
| 12 | 27 | 18 | 22 | 12 | 21 | 19 | 27 | 19 | 21 | 15 |
| 13 | 3 | 6 | 64 | 6 | 20 | 34 | 12 | 26 | 12 | 16 |
| 14 | 14 | 29 | 6 | 18 | 33 | 9 | 50 | 6 | 12 | 24 |
| 15 | 32 | 24 | 18 | 7 | 19 | 24 | 10 | 28 | 15 | 24 |
| 16 | 25 | 22 | 39 | 11 | 3 | 15 | 31 | 42 | 4 | 9 |
| 17 | 29 | 22 | 24 | 11 | 14 | 32 | 11 | 9 | 43 | 6 |
| 18 | 8 | 11 | 45 | 23 | 14 | 5 | 13 | 35 | 14 | 33 |
| 19 | 16 | 40 | 29 | 7 | 9 | 29 | 18 | 8 | 20 | 26 |
| 20 | 26 | 26 | 18 | 21 | 10 | 21 | 28 | 17 | 24 | 10 |
| 21 | 30 | 9 | 7 | 42 | 12 | 11 | 16 | 49 | 20 | 4 |
| 22 | 28 | 14 | 23 | 23 | 13 | 4 | 9 | 59 | 22 | 7 |
| 23 | 5 | 6 | 8 | 5 | 76 | 2 | 4 | 36 | 7 | 50 |
| 24 | 13 | 26 | 39 | 13 | 10 | 6 | 49 | 25 | 11 | 9 |
| 25 | 35 | 18 | 31 | 8 | 8 | 10 | 36 | 28 | 10 | 17 |
| 26 | 11 | 42 | 27 | 9 | 10 | 28 | 21 | 8 | 16 | 28 |
| 27 | 19 | 18 | 23 | 9 | 30 | 35 | 24 | 17 | 11 | 12 |
| 28 | 27 | 18 | 37 | 10 | 8 | 69 | 17 | 8 | 2 | 4 |
| 29 | 12 | 17 | 24 | 36 | 11 | 14 | 62 | 8 | 4 | 13 |
| 30 | 22 | 48 | 14 | 12 | 3 | 28 | 52 | 10 | 9 | 0 |

Table A2. Percentages of calculus-based (calc-based) introductory physics students who selected choice (a)–(e) on questions (1)–(30) on the test. The correct response for each question has been underlined.

| Item # | Pre-test a | Pre-test b | Pre-test c | Pre-test d | Pre-test e | Post-test a | Post-test b | Post-test c | Post-test d | Post-test e |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 11 | 2 | 8 | 67 | 12 | 2 | 2 | 5 | 78 | 13 |
| 2 | 23 | 12 | 64 | 1 | 1 | 12 | 8 | 80 | 1 | 0 |
| 3 | 10 | 52 | 36 | 1 | 0 | 5 | 40 | 55 | 0 | 0 |
| 4 | 53 | 8 | 4 | 24 | 12 | 71 | 3 | 2 | 17 | 6 |
| 5 | 10 | 6 | 5 | 24 | 54 | 40 | 4 | 4 | 11 | 42 |
| 6 | 5 | 22 | 8 | 5 | 59 | 3 | 38 | 5 | 5 | 49 |
| 7 | 11 | 16 | 11 | 11 | 51 | 3 | 3 | 19 | 35 | 40 |
| 8 | 14 | 20 | 8 | 49 | 8 | 7 | 8 | 6 | 77 | 2 |
| 9 | 2 | 36 | 25 | 35 | 2 | 2 | 61 | 20 | 16 | 2 |
| 10 | 18 | 9 | 3 | 49 | 21 | 6 | 3 | 3 | 55 | 32 |
| 11 | 23 | 5 | 13 | 27 | 32 | 17 | 3 | 40 | 19 | 21 |
| 12 | 24 | 21 | 20 | 17 | 19 | 17 | 24 | 16 | 33 | 11 |
| 13 | 13 | 15 | 56 | 8 | 9 | 59 | 3 | 24 | 9 | 6 |
| 14 | 11 | 29 | 4 | 15 | 41 | 5 | 46 | 3 | 9 | 37 |
| 15 | 32 | 24 | 17 | 11 | 16 | 19 | 13 | 24 | 17 | 27 |
| 16 | 29 | 25 | 35 | 7 | 4 | 45 | 19 | 31 | 3 | 3 |
| 17 | 35 | 18 | 23 | 14 | 10 | 28 | 11 | 14 | 44 | 3 |
| 18 | 11 | 8 | 50 | 14 | 17 | 4 | 9 | 26 | 10 | 51 |
| 19 | 16 | 33 | 28 | 11 | 12 | 19 | 24 | 10 | 20 | 27 |
| 20 | 23 | 24 | 18 | 25 | 11 | 16 | 34 | 17 | 26 | 7 |
| 21 | 22 | 19 | 17 | 29 | 13 | 11 | 22 | 54 | 8 | 6 |
| 22 | 17 | 11 | 34 | 30 | 8 | 4 | 4 | 60 | 29 | 4 |
| 23 | 8 | 9 | 16 | 6 | 61 | 2 | 2 | 50 | 6 | 41 |
| 24 | 10 | 30 | 41 | 13 | 7 | 6 | 47 | 33 | 10 | 5 |
| 25 | 26 | 20 | 38 | 9 | 7 | 7 | 25 | 35 | 12 | 21 |
| 26 | 15 | 39 | 25 | 8 | 12 | 20 | 27 | 7 | 16 | 30 |
| 27 | 22 | 23 | 21 | 15 | 20 | 44 | 21 | 12 | 10 | 14 |
| 28 | 36 | 16 | 20 | 9 | 19 | 70 | 9 | 5 | 2 | 14 |
| 29 | 19 | 26 | 23 | 22 | 11 | 17 | 52 | 6 | 5 | 21 |
| 30 | 22 | 40 | 20 | 15 | 4 | 28 | 54 | 10 | 8 | 1 |

Table A3. Percentages of physics majors in the upper-level undergraduate E&M course who selected choice (a)–(e) on questions (1)–(30) on the pre-test. The correct response for each question has been underlined.

| Item # | a | b | c | d | e | Item # | a | b | c | d | e |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 73 | 27 | 16 | 50 | 23 | 23 | 4 | 0 |
| 2 | 4 | 4 | 92 | 0 | 0 | 17 | 42 | 8 | 8 | 35 | 8 |
| 3 | 4 | 46 | 50 | 0 | 0 | 18 | 8 | 8 | 15 | 4 | 65 |
| 4 | 58 | 4 | 0 | 35 | 4 | 19 | 4 | 35 | 8 | 8 | 46 |
| 5 | 42 | 0 | 8 | 8 | 42 | 20 | 15 | 54 | 12 | 15 | 4 |
| 6 | 8 | 46 | 0 | 0 | 46 | 21 | 12 | 39 | 39 | 8 | 4 |
| 7 | 0 | 4 | 39 | 27 | 31 | 22 | 0 | 4 | 69 | 23 | 4 |
| 8 | 4 | 4 | 0 | 89 | 4 | 23 | 0 | 0 | 19 | 0 | 81 |
| 9 | 4 | 50 | 27 | 19 | 0 | 24 | 4 | 42 | 39 | 8 | 8 |
| 10 | 8 | 0 | 4 | 77 | 12 | 25 | 8 | 39 | 27 | 4 | 23 |
| 11 | 15 | 4 | 39 | 27 | 15 | 26 | 4 | 19 | 12 | 8 | 58 |
| 12 | 8 | 12 | 27 | 27 | 27 | 27 | 47 | 27 | 12 | 0 | 15 |
| 13 | 50 | 0 | 35 | 15 | 0 | 28 | 85 | 15 | 0 | 0 | 0 |
| 14 | 4 | 31 | 0 | 12 | 54 | 29 | 23 | 58 | 0 | 0 | 19 |
| 15 | 8 | 23 | 15 | 12 | 42 | 30 | 35 | 65 | 0 | 0 | 0 |

Table A4. Percentages of physics majors in the upper-level undergraduate E&M course who selected choice (a)–(e) on questions (1)–(30) on the post-test. The correct response for each question has been underlined.

| Item # | a | b | c | d | e | Item # | a | b | c | d | e |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 4 | 76 | 20 | 16 | 52 | 24 | 24 | 0 | 0 |
| 2 | 0 | 4 | 92 | 4 | 0 | 17 | 33 | 12 | 4 | 50 | 0 |
| 3 | 4 | 44 | 52 | 0 | 0 | 18 | 4 | 4 | 12 | 12 | 68 |
| 4 | 68 | 0 | 0 | 32 | 0 | 19 | 20 | 16 | 8 | 12 | 44 |
| 5 | 56 | 0 | 0 | 16 | 28 | 20 | 8 | 40 | 8 | 32 | 12 |
| 6 | 0 | 52 | 0 | 4 | 44 | 21 | 8 | 16 | 76 | 0 | 0 |
| 7 | 4 | 0 | 12 | 72 | 12 | 22 | 0 | 0 | 68 | 28 | 4 |
| 8 | 0 | 4 | 4 | 92 | 0 | 23 | 0 | 0 | 52 | 0 | 48 |
| 9 | 0 | 60 | 24 | 16 | 0 | 24 | 0 | 36 | 44 | 20 | 0 |
| 10 | 4 | 0 | 0 | 52 | 44 | 25 | 0 | 16 | 32 | 8 | 44 |
| 11 | 8 | 0 | 80 | 4 | 8 | 26 | 12 | 28 | 0 | 8 | 52 |
| 12 | 4 | 8 | 16 | 28 | 44 | 27 | 56 | 12 | 12 | 0 | 20 |
| 13 | 60 | 4 | 24 | 4 | 8 | 28 | 84 | 16 | 0 | 0 | 0 |
| 14 | 0 | 36 | 0 | 8 | 56 | 29 | 4 | 76 | 0 | 0 | 20 |
| 15 | 24 | 8 | 24 | 12 | 32 | 30 | 36 | 56 | 0 | 8 | 0 |

Table A5. Percentages of first year physics PhD students who selected choice (a)–(e) on questions (1)–(30) on the MCS test. The correct response for each question has been underlined.

| Item | a | b | c | d | e | Item | a | b | c | d | e |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 2 | 5 | 83 | 10 | 16 | 74 | 12 | 14 | 0 | 0 |
| 2 | 2 | 0 | 98 | 0 | 0 | 17 | 12 | 0 | 10 | 79 | 0 |
| 3 | 0 | 5 | 95 | 0 | 0 | 18 | 0 | 2 | 2 | 2 | 93 |
| 4 | 81 | 0 | 0 | 17 | 2 | 19 | 2 | 5 | 2 | 0 | 91 |
| 5 | 93 | 0 | 2 | 0 | 5 | 20 | 5 | 60 | 10 | 21 | 5 |
| 6 | 0 | 91 | 5 | 2 | 2 | 21 | 0 | 24 | 74 | 0 | 2 |
| 7 | 0 | 0 | 14 | 71 | 14 | 22 | 0 | 0 | 74 | 26 | 0 |
| 8 | 0 | 2 | 2 | 95 | 0 | 23 | 2 | 0 | 74 | 5 | 19 |
| 9 | 0 | 93 | 5 | 2 | 0 | 24 | 0 | 86 | 10 | 5 | 0 |
| 10 | 0 | 2 | 2 | 81 | 14 | 25 | 2 | 12 | 14 | 2 | 69 |
| 11 | 7 | 0 | 88 | 5 | 0 | 26 | 2 | 10 | 0 | 2 | 86 |
| 12 | 2 | 2 | 2 | 88 | 5 | 27 | 74 | 16 | 0 | 7 | 2 |
| 13 | 95 | 0 | 0 | 0 | 5 | 28 | 91 | 2 | 0 | 0 | 7 |
| 14 | 0 | 14 | 0 | 0 | 86 | 29 | 5 | 76 | 0 | 2 | 17 |
| 15 | 19 | 2 | 2 | 0 | 76 | 30 | 10 | 83 | 2 | 5 | 0 |

Table A6. Percentages of correct response on each item on MCS by gender (F for female and M for male students) in the algebra-based (alg) and regular section of the calculus-based (calc) introductory physics courses on the post-test.

| Item | Alg-M | Alg-F | Calc-M | Calc-F |
|---|---|---|---|---|
| 1 | 61 | 55 | 79 | 73 |
| 2 | 62 | 55 | 82 | 74 |
| 3 | 40 | 39 | 56 | 53 |
| 4 | 59 | 50 | 73 | 67 |
| 5 | 36 | 43 | 42 | 38 |
| 6 | 49 | 50 | 38 | 39 |
| 7 | 46 | 42 | 38 | 28 |
| 8 | 79 | 74 | 80 | 71 |
| 9 | 44 | 40 | 62 | 56 |
| 10 | 48 | 41 | 57 | 50 |
| 11 | 36 | 32 | 41 | 37 |
| 12 | 28 | 20 | 35 | 26 |
| 13 | 37 | 32 | 61 | 53 |
| 14 | 25 | 18 | 42 | 24 |
| 15 | 25 | 26 | 28 | 23 |
| 16 | 21 | 8 | 45 | 43 |
| 17 | 41 | 54 | 42 | 48 |
| 18 | 44 | 30 | 56 | 38 |
| 19 | 33 | 25 | 30 | 21 |
| 20 | 25 | 31 | 36 | 29 |
| 21 | 51 | 55 | 54 | 51 |
| 22 | 64 | 52 | 63 | 51 |
| 23 | 39 | 32 | 54 | 39 |
| 24 | 55 | 46 | 50 | 38 |
| 25 | 18 | 20 | 24 | 14 |
| 26 | 28 | 32 | 33 | 23 |
| 27 | 37 | 35 | 47 | 36 |
| 28 | 72 | 69 | 72 | 63 |
| 29 | 66 | 67 | 54 | 46 |
| 30 | 49 | 55 | 54 | 52 |